Named Entity Recognition in the Legal Domain

Financial institutions such as investment banks or mortgage companies have access to rich sources of unstructured textual data, for example legal documents and corporate reports.

These documents include information of paramount importance which can be used to drive business value. Information Extraction (IE), which is responsible for extracting relevant information from unstructured data, consists of several subtasks including named entity recognition, coreference resolution, and relationship extraction.

This is the first of two blog posts discussing RelationalAI’s work towards Named Entity Recognition (NER) in the legal domain, describing a human-in-the-loop approach to annotating a large number of legal documents.

Our work was accepted as a contribution in the Industry and Government Program of the IEEE Big Data 2022 Conference, and will be presented on December 19, 2022.

You can read our paper here.

What Is NER?

NER is one of the most pivotal IE tasks and a fundamental block in a variety of important applications, such as knowledge base construction and semantic search.

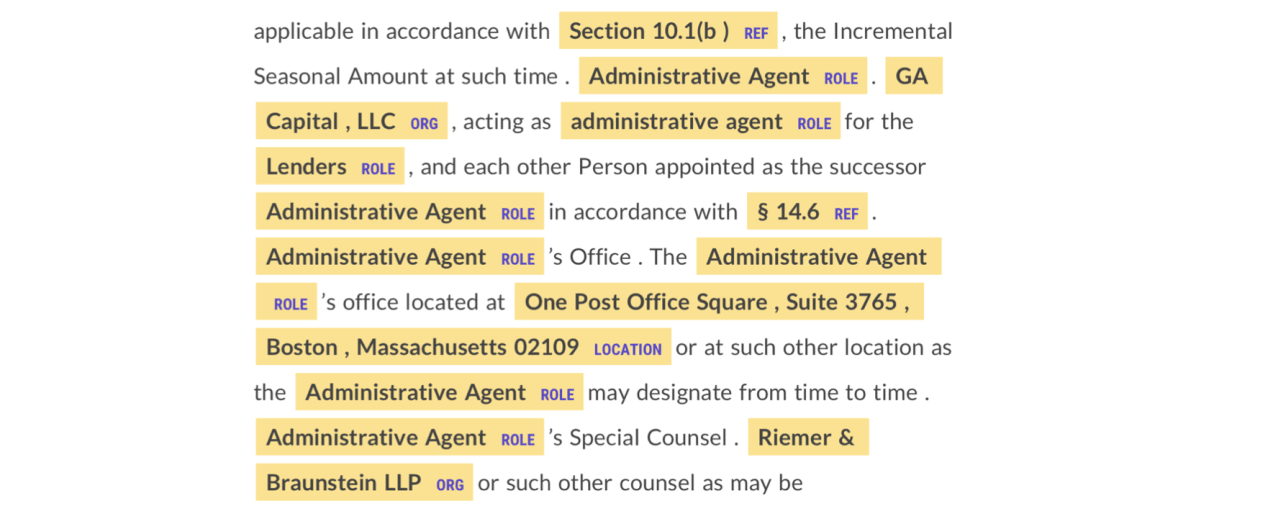

NER is the task of identifying instances of a predefined set of entity types in a text document, for example location or organization. Figure 1 exhibits four types of entities in the cover page of a loan agreement document.

You may wonder what makes the application of NER in legal documents so interesting to study?

The answer lies in the fact that legal documents, like most text documents, exhibit specific characteristics that make extracting useful information more challenging. Legal documents are usually long documents with a deeply hierarchical structure and hyperlinks that are human readable only.

Figure 2 shows the hierarchical content and human readable links in a sample loan agreement document.

Generating Labeled Data for NER in the Legal Domain

Building a robust machine-learning model for solving legal-domain NER has a major upfront cost: generating a large amount of labeled examples. The existing general-purpose (e.g., CoNLL2003) and domain-specific (e.g., JNLPBA for the biomedical domain) NER datasets do not cover a wide variety of legal-domain entities, which makes it necessary to generate high-quality annotated legal documents for training language models.

Manually annotating numerous entities in legal documents is a costly and time-consuming task. As a result, the number of publicly available legal datasets in the natural language processing (NLP) domain is very small (Chalkidis et al. (2017) and Hendryks et al. (2021) are two notable exceptions).

Data-Centric AI

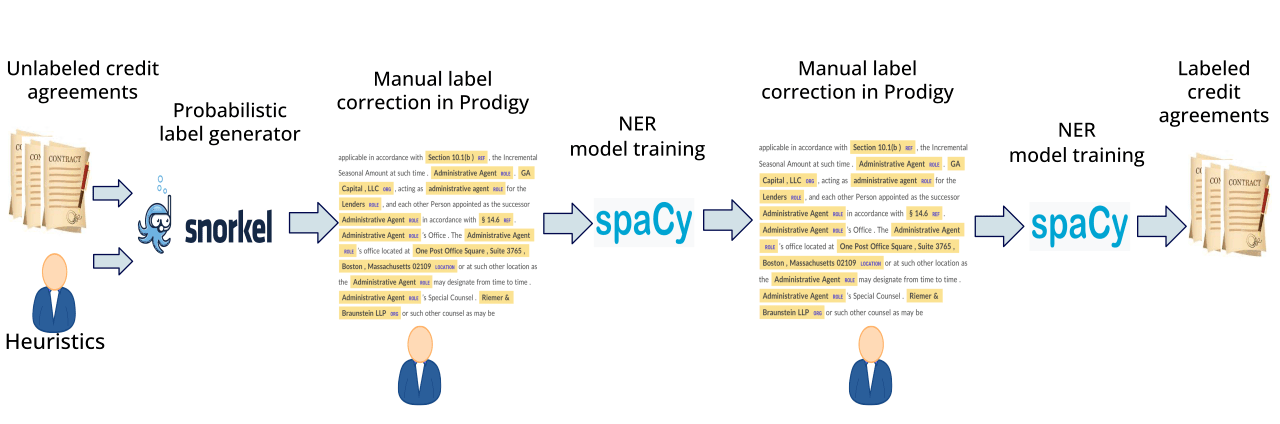

RelationalAI proposes a human-in-the-loop strategy for generating a large number of labeled examples. The proposed framework combines both manual labeling and automated procedures in an iterative process to generate high-quality annotations.

The method for iteratively developing ground truth data is motivated by the principles of data-centric AI. In the data-centric philosophy, developing a programmatic process for collecting, cleaning, labeling, and augmenting training data is the key factor for progress for AI development.

Following this philosophy, we don’t view the ground truth labels as a static input that is completely separated from the model development.

The advantage of our approach is that given a very small set of manually annotated legal documents (less than 25 in total), our human-in-the-loop iterative framework can produce a large number of near gold-standard labeled examples. The number of documents can even be as big as the initial corpus.

RelationalAI’s NER Framework

We used the proposed framework to annotate the following 11 distinct entity types in loan agreement documents crawled from the U.S. Securities and Exchange Commission (SEC) platform:

Amount Date Definition Location Organization Percent Person Ratio Reference Regulation Role

Figure 3 summarizes the proposed strategy in five steps.

Generating Noisy Labels

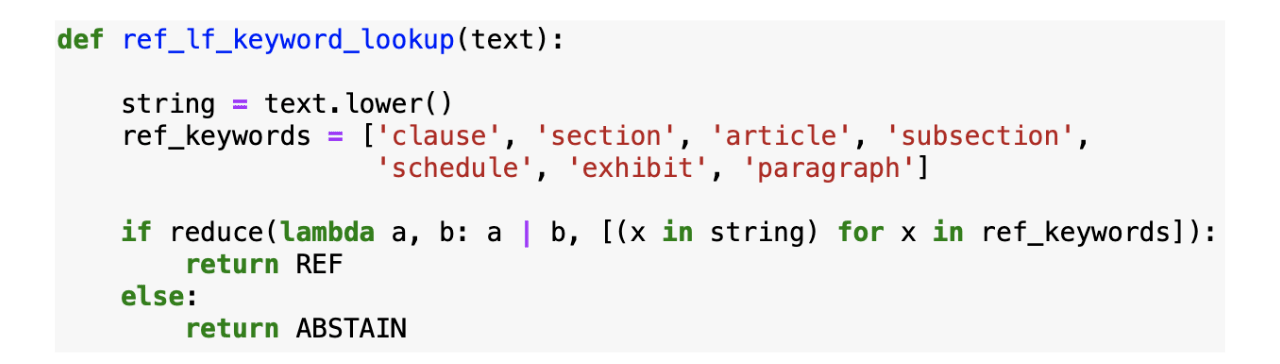

To generate noisy labels for a small number of legal documents (13 in our case), we combine multiple sources of possibly correlated heuristic rules, also known as labeling functions, with the help of a weak supervision paradigm, namely Snorkel Ratner et al. (2017).

Figure 4 presents an example of a Snorkel labeling function for identifying reference entities given an input text, for example, a span of five lowercase, space-separated words.

In brief:

Snorkel learns a generative model to combine the output of heuristic rules into probabilistic labels without access to ground truth. Generating gold-standard annotations from Snorkel predictions is faster than annotating from scratch. Snorkel produces decent --- but far from gold standard --- labels.

Manually Correcting Noisy Labels

For the small number of documents for which we generate noisy labels, we manually correct the deficiencies and add annotations that were missing by using the Prodi.gy annotation tool.

Prodi.gy provides a straightforward user interface that allows manual annotators to correct or add new annotations quickly. At least two annotators work on fixing the deficiencies for each document. In case of disagreements, the annotators come together to reach a common consensus.

Training an NER Model

We use the gold-standard labels generated in the previous step to train the spaCy v2.0 NER model, which uses a deep convolutional neural network with a transition-based named-entity tagging scheme.

This is a small and simple language model, however it achieves relatively good performance with a small number of labeled examples.

Manually Correcting Less Noisy Labels

We use the trained spaCy v2.0 NER model to find named-entities in new legal documents. Then, we manually correct the mistakes in the predicted labels, augmenting the gold-standard data, using a similar approach to manually correcting noisy labels.

The labels produced here are of much higher quality compared to the labels produced with heuristic rules. This significantly speeds up the process of correcting deficiencies and generating gold-standard annotations.

Retraining the NER Model

We retrain the spaCy v2.0 NER model on the new augmented gold-standard dataset produced during the manual correction of less noisy labels. We use this NER model to annotate thousands of legal documents to generate near gold-standard (NGS) labels.

The manual inspection of a small portion of the near gold-standard data produced verified the high quality of the annotations. In fact, most mis-predictions are either confusing to human annotators or are one-off cases.

Figure 5 shows an example page from the NGS labels in the Prodi.gy web-based platform.

A Scalable Solution

Named entity recognition is a difficult challenge to solve, particularly in the legal domain. Extracting ground truth labels from long, hierarchical documents is often slow and prone to error.

RelationalAI proposes a new, scalable algorithm based on the principles of data-centric AI, designed to meet this challenge and generate high-quality annotations for the named entity recognition task with minimal supervision.

In our next post, we will look at existing approaches to NER in more detail, and discuss our series of NER experiments using a large corpus of publicly available loan agreement documents and the ground truth labels generated.